Is AI literally all about algorithms?

In many discussions of artificial intelligence (AI), it’s almost as if many (or even all) AI theorists and workers in the field believe that disembodied algorithms and computations can, in and of themselves, bring about mind, consciousness and understanding. (The same can be said, though less strongly, about the functions of the functionalists.) This (implicit) position, as the philosopher John Searle once argued (more of which later), is a kind of contemporary dualism in which abstract objects (i.e., computations and algorithms) bring about mind, consciousness and understanding on their own. Abstract algorithms, then, may well have become the contemporary version of Descartes’ mind-as-a-non-physical-substance, at least according to Searle.

It’s hard to even comprehend how anyone could believe that an algorithm or computation alone could be a candidate for possession of (or capable of bringing about) a (to use Roger Penrose’s example) conscious state or consciousness itself. (Perhaps no one does believe that.) In fact it’s hard to comprehend what that could even mean. Yet when you read some (or even much) of the AI literature, that appears to be exactly what various theorists and workers in the field believe.

Of course no AI theorist would ever claim that his (as it were) Marvellous Algorithms don’t actually need to be implemented. Yet if the material (or nature) of the implementation is irrelevant, then isn’t implementation in toto irrelevant too?

So if we do have the situation of AI theorists emphasising algorithms or computations at the expense of literally everything else, then this ends up being, as Roger Penrose will argue later, a kind of Platonism (or abstractionism) in which implementation is either, at best, secondary; or, at worst, completely irrelevant.

Another angle on this issue is to argue that it’s wrong to accept this algorithm-implementation “binary opposition” in the first place. This means that it isn’t at all wrong to concentrate on algorithms. It’s just wrong to completely ignore the “physical implementation” side of things. Or, as the philosopher Patricia Churchland once stated, it’s wrong to completely “ignore neuroscience” (i.e., brains and biology) and focus entirely on algorithms, functions, computations, etc.

So, at best, surely we need the (correct) material implementation of such abstract objects.

AI’s Platonic Algorithms?

Let me quote a passage in which the mathematical physicist and mathematician Roger Penrose (1931-) raises the possibility that strong artificial intelligence theorists are implicitly committed to at least some kind of Platonism.

[Strong AI is defined in various different ways. Philosophers tend to define it in one way and AI theorists in another way. See artificial general [i.e., strong] intelligence.]

Firstly, Penrose raises the issue of the physical “enaction” and implementation of a relevant algorithm:

“The issue of what physical actions should count as actually enacting an algorithm is profoundly unclear.”

Then this problem is seen to lead — logically — to a kind of Platonism. Penrose continues:

“Perhaps such actions are not necessary at all, and to be in accordance with view point A, the mere Platonic mathematical existence of the algorithm would be sufficient for its ‘awareness’ to be present.”

Of course no AI theorist would ever claim that even a Marvellous Algorithm doesn’t need to be implemented and enacted at the end of the day. In addition, he’d probably scoff at Penrose’s idea that “the mere Platonic mathematical existence of the algorithm would be sufficient for its ‘awareness’ to be present”.

Yet surely Penrose has a point.

If it’s literally all about algorithms (which can be, to borrow a term from the philosophy of mind, multiply realised), then why can’t the relevant algorithms do the required job entirely on their own? That is, why don’t these abstract algorithms automatically instantiate consciousness (to be metaphorical for a moment) while floating around in their abstract spaces?

In any case, Penrose’s position can be expressed in very simple terms.

If the strong AI position is all about algorithms, then literally any implementation of a Marvellous Algorithm (or Set of Magic Algorithms) would bring about consciousness and understanding.

More specifically, Penrose focuses on a single qualium. (The issue of the status, existence and reality of qualia will be ignored in this piece.) He writes:

“Such an implementation would, according to the proponents of such a suggestion, have to evoke the actual experience of the intended qualium.”

If the precise hardware doesn’t at all matter, then only the Marvellous Algorithm matters. Of course the Marvellous Algorithm would need to be implemented… in something. Yet this may not be the case if we follow the strong AI position to its logical conclusion. At least this conclusion can be drawn out of Penrose’s own words.

So now let’s tackle the actual implementation of Marvellous Algorithms.

The Implementation of Algorithms

Roger Penrose states that

“[s]uch an implementation [of a “clear-cut and reasonably simple algorithmic suggestion”] would, according to the proponents of such a suggestion, have to evoke the actual experience of the intended qualium”.

It’s hard to tell what that ostensible AI position could even mean. Of course that’s only if Penrose is being fair to AI theorists in his account. In other words, surely it can’t possibly be the case that an implementation (or “enaction”) of any algorithm (even if complex rather than “simple”) could in and of itself “evoke” an actual experience or evoke anything at all — a qualium or otherwise. Again, it’s hard to understand what all that could mean.

Of course if we accept that the human brain does implement algorithms and computations, then our fleshy “hardware” (or wetware) already does evoke consciousness.

So are we talking about a single algorithm here? Perhaps. However, even if multiple algorithms (which are embedded in, or part of, a larger algorithm) are being discussed, implementation (or at least implementation alone) still can’t be the whole story. The whole story must surely depend on the nature of the hardware (whether non-biological, biological or otherwise), how the algorithms are actually implemented and on many other (non-abstract) factors.

There’s another problem with what Penrose says (which may just be a matter of his wording) when he writes the following:

“It would be hard [] to accept seriously that such a computation [] could actually experience mentality to any significant degree.”

Surely AI theorists don’t argue that a relevant algorithm (or set of algorithms) “could actually experience mentality”: they argue that such an algorithm brings about, causes or whatever “mentality”. How on earth, in other words, could an abstract entity — an algorithm — actually experience mentality? If anything, this is a category mistake on Penrose’s part.

Of course Penrose might have simply meant this:

algorithm + implementation = the experience of mentality

Yet even here there are philosophical problems.

Firstly, what would it be that (to use Penrose’s words again) “experiences mentality”? The algorithm itself or the algorithm-implementation fusion? Could that fusion actually be an experience? Or would something else — such as a person or any physical entity — be needed to actually “have” (or instantiate) that experience?

As stated in the introduction, Roger Penrose and John Searle have accused (if that’s the right word) AI theorists of dualism and Platonism; and AI theorists have returned the favour by accusing Penrose and Searle of exactly the same isms.

So who exactly are the Platonists and dualists… and who are the physicalists?

Is AI Physicalist?

It’s ironic that Penrose should state (or perhaps simply hint) that his own position on consciousness is better described as “physicalist” (see physicalism) than the position of strong AI theorists. He puts this in the following passage:

“According to A, the material construction of a thinking device is regarded as irrelevant. It is simply the computation that it performs that determines all its mental attributes.”

Then comes another accusation of Platonism and indeed of dualism:

“Computations themselves are pieces of abstract mathematics, divorced from any association with particular material bodies. Thus, according to A, mental attributes are themselves things with no particular association with physical objects [].”

Thus Penrose seems to correctly conclude (though that’s only if we accept his take on what AI theorists are implicitly committed to) by saying “so the term ‘physicalist’ might seem a little inappropriate” for this AI position. Penrose’s own position, on the other hand,

“demand[s] that the actual physical constitution of an object must indeed be playing a vital role in determining whether or not there is genuine mentality present in association with it”.

Of course all this (at least with regard to both Penrose’s position and that of strong AI) seems like a reversal of terminology. Penrose himself recognises this and says that “such terminology would be at variance with some common usage”, certainly the common usage of many analytic philosophers.

In any case, the biologist and neuroscientist Gerald Edelman (1929–2014) takes a similar position to Penrose — at least when it comes to his emphasis on biology and the brain.

For example, Edelman once said that mind and consciousness

“can only be understood from a biological standpoint, not through physics or computer science or other approaches that ignore the structure of the brain”.

And then there’s the philosopher John Searle’s position.

Searle himself doesn’t spot an implicit Platonism in AI theory (as does Penrose): he spots an implicit dualism. Of course this is ironic because many AI theorists, philosophers and functionalists have accused Searle of being a “dualist”.

Searle’s basic position is that if AI theorists, computationalists or functionalists dispute (or simply ignore) the physical and causal biology of brains and exclusively focus on syntax, computations/algorithms and functions, then that will surely lead to at least some kind of dualism. In other words, Searle argues that AI theorists and functionalists set up a radical disjunction between the actual physical (therefore causal) reality of the brain and the way they explain (or account for) intentionality, mind, consciousness and understanding.

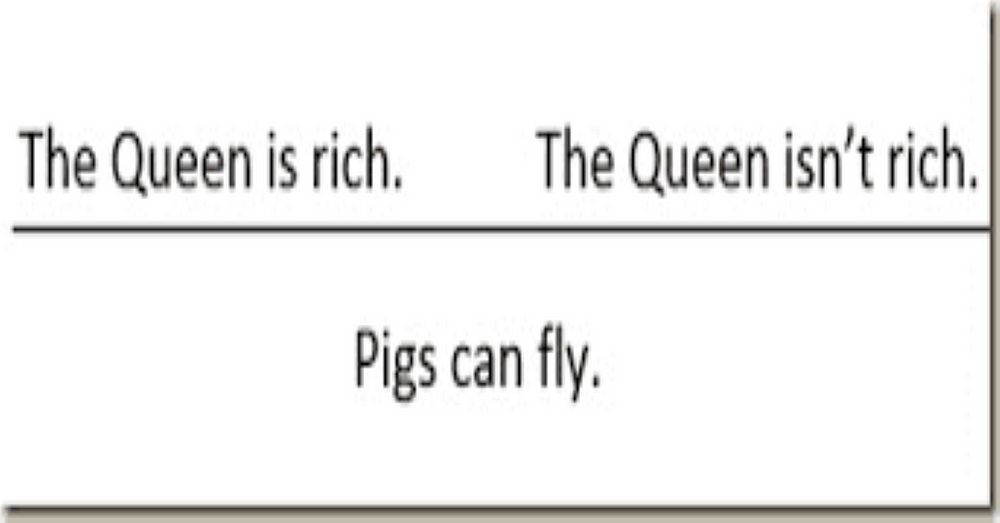

So Searle’s basic position on all this is stated in the following:

i) If Strong AI proponents ignore (or play down) the physical biology of brains; and, instead, focus exclusively on syntax, computations/algorithms or functions,

ii) then that will surely lead to some kind of dualism in which non-physical (i.e., abstract) objects play the role of Descartes’ non-physical (i.e., “non-extended”) mind.

Again: Searle is noting the radical disjunction which is set up between the actual physical reality of biological brains and how these philosophers, scientists and theorists actually explain — and account for — mind, consciousness and understanding.

We also have John Searle’s position as it’s expressed in the following:

“If mental operations consist of computational operations on formal symbols, it follows that they have no interesting connection with the brain, and the only connection would be that the brain just happens to be one of the indefinitely many types of machines capable of instantiating the program.”

Now for the dualism:

“This form of dualism is not the traditional Cartesian variety that claims that there are two sorts of substances, but it is Cartesian in the sense that it insists that what is specifically mental about the brain has no intrinsic connection with the actual properties of the brain. This underlying dualism is masked from us by the fact that AI literature contains frequent fulminations against ‘dualism’.”

Despite all the words above, Searle doesn’t believe that only biological brains can give rise to understanding and consciousness. Searle’s position is that — empirically speaking — only brains do give rise to understanding and consciousness. So he’s emphasising what he takes to be an empirical fact. That is, Searle isn’t denying the logical — and even metaphysical — possibility that other entities can bring forth minds, consciousness and understanding.

Roger Penrose on Abstract-Concrete Isomorphisms

Is there some kind of isomorphic relation between the (as it were) shape of the abstract algorithm and the shape of its concrete implementation? Penrose himself asks this question a little less metaphorically. He writes:

“Does ‘enaction’ mean that bits of physical material must be moved around in accordance with the successive operations of the algorithm?”

Yet surely this does happen in countless cases when it comes to computers. (In this case, electricity is being “moved around”, transistors are opened and shut, etc.) Or as David Chalmers puts it in the specific case of simulating (or even mimicking) the human brain:

“[I]n an ordinary computer that implements a neuron-by-neuron simulation of my brain, there will be real causation going on between voltages in various circuits, precisely mirroring patterns of causation between the neurons.”

So Penrose isn’t questioning these successful implementations and enactions in computers (how could he be?) but simply saying that material must matter. Alternatively, does Penrose believe that implementations and enactions fail to make sense only when it comes to the singular case of consciousness?

The Australian philosopher David Chalmers (1966-) offers some help here.

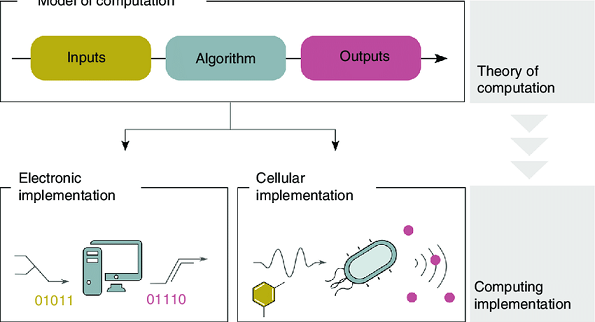

David Chalmers on Causal Structure

Take a recipe for a meal.

To David Chalmers, the recipe is a “syntactic object”. However, the meal itself (as well as the cooking process) is an “implementation” which occurs in what he calls the “real world”.

Chalmers also talks about “causal structure” in relation to programmes and their physical implementation. Thus:

“Implementations of programs, on the other hand, are concrete systems with causal dynamics, and are not purely syntactic. An implementation has causal heft in the real world, and it is in virtue of this causal heft that consciousness and intentionality arise.”

Then Chalmers delivers his clinching line:

“It is the program that is syntactic, it is the implementation that has semantic content.”

More clearly, a physical machine is deemed to belong to the semantic domain and a syntactic programme is deemed to be abstract. Thus a physical machine is said to provide a “semantic interpretation” of the abstract syntax.

Yet how can the semantic automatically arise from an implementation of that which is purely abstract and syntactic?

Well… that depends.

Firstly, it may not automatically arise. And, secondly, it may depend on the nature of the implementation as well as physical material used for the implementation.

To go back to Roger Penrose’s earlier words on “enaction”, he asked this question:

“Does ‘enaction’ mean that bits of physical material must be moved around in accordance with the successive operations of the algorithm?”

And it’s here that — in Chalmers’ case at least — we arrive at the importance and relevance of “causal structure”.

So it’s not only about implementation: it’s also about the fact that any given implementation will have a certain causal structure. And, according to Chalmers, only certain (physical) causal structures will (or could) bring forth consciousness, mind and understanding.

Does the physical implementation need to be (to use a word that Chalmers himself uses) an “isomorphic” kind of mirroring (or a precise “mapping”) of the abstract? And if it does, then how does that (in itself) bring about the semantics?

One can see how vitally important causation is to Chalmers when he says that “both computation and content should be dependent on the common notion of causation”. In other words, an algorithm or computation and a given implementation will share a causal structure. Indeed Chalmers cites the example of a Turing machine when he says that “we need only ensure that this formal structure is reflected in the causal structure of the implementation”.

Chalmers continues:

“Certainly, when computer designers ensure that their machines implement the programs that they are supposed to, they do this by ensuring that the mechanisms have the right causal organization.”

In addition, Chalmers tells us what a physical implementation is in the simplest possible terms. And, in his definition, he again refers to “causal structure”:

“A physical system implements a given computation when the causal structure of the physical system mirrors the formal structure of the computation.”

Then Chalmers goes into more detail:

“A physical system implements a given computation when there exists a grouping of physical states of the system into state-types and a one-to-one mapping from formal states of the computation to physical state-types, such that formal states related by an abstract state-transition relation are mapped onto physical state-types related by a corresponding causal state-transition relation.”

Chalmers also stresses the notion of (correct) “mapping”. And what’s relevant about mapping is that the “causal relations between physical state-types will precisely mirror the abstract relations between formal states”. Moreover:

“What is important is the overall form of the definition: in particular, the way it ensures that the formal state-transitional structure of the computation mirrors the causal state-transitional structure of the physical system.”
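Chalmers’ definition can be made a little more concrete with a toy example. What follows is only a minimal sketch under loud assumptions: the computation is a two-state parity automaton, the “physical system” is a made-up set of voltage levels, and a lookup table stands in for what Chalmers calls causal state-transitions. (A table obviously isn’t causation; it’s merely a record of which state follows which.) The names implements, parity, voltage_system and grouping are all hypothetical and aren’t taken from Chalmers.

```python
from typing import Dict, Hashable, Tuple

# A transition table: (state, input symbol) -> next state.
Transitions = Dict[Tuple[Hashable, Hashable], Hashable]


def implements(physical: Transitions,
               formal: Transitions,
               grouping: Dict[Hashable, Hashable]) -> bool:
    """Check whether the physical table mirrors the formal one.

    `grouping` assigns each physical state to a formal state, i.e. it
    sorts physical states into state-types (an assumption of this toy).
    """
    formal_states = {state for state, _ in formal} | set(formal.values())
    # Every formal state needs at least one physical state-type member.
    if set(grouping.values()) != formal_states:
        return False
    for p_state, f_state in grouping.items():
        for (src, symbol), f_next in formal.items():
            if src != f_state:
                continue
            # The physical successor must fall in the state-type that
            # the formal transition relation demands.
            p_next = physical.get((p_state, symbol))
            if p_next is None or grouping.get(p_next) != f_next:
                return False
    return True


# Formal computation: parity of the number of 1s seen so far.
parity: Transitions = {
    ("even", 0): "even", ("even", 1): "odd",
    ("odd", 0): "odd", ("odd", 1): "even",
}

# Hypothetical physical system: four voltage levels, two per state-type.
grouping = {"0.1V": "even", "0.3V": "even", "4.8V": "odd", "5.0V": "odd"}
voltage_system: Transitions = {
    ("0.1V", 0): "0.1V", ("0.1V", 1): "4.8V",
    ("0.3V", 0): "0.1V", ("0.3V", 1): "5.0V",
    ("4.8V", 0): "5.0V", ("4.8V", 1): "0.3V",
    ("5.0V", 0): "5.0V", ("5.0V", 1): "0.1V",
}

# Same hardware with one fault: the 5.0V state is stuck high on input 1.
broken_system = dict(voltage_system)
broken_system[("5.0V", 1)] = "5.0V"

print(implements(voltage_system, parity, grouping))
print(implements(broken_system, parity, grouping))
```

The point of the sketch is that the check says nothing about what the states are made of (voltages, beads or neurons would all do), only about whether the pattern of transitions lines up. That is exactly the feature of the definition which the rest of this piece is questioning.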

Chalmers also states the following:

“While it may be plausible that static sets of abstract symbols do not have intrinsic semantic properties, it is much less clear that formally specified causal processes cannot support a mind.”

In Chalmers’ account, then, the (causal) concrete does appear to be vital in that the “computational descriptions are applied to physical systems [because] they effectively provide a formal description of the systems’ causal organisation”.

So what is it, exactly, that’s being described?

According to Chalmers, the physical (or concrete) “causal organisation” is being described. And when described, it becomes an “abstract causal organisation”. (Is the word “causal” at all apt when used in conjunction with what is abstract?) However, the causal organisation is abstract in the sense that all peripheral non-causal and non-functional aspects of the physical system are simply factored out. Thus all we have left is an abstract remainder. Nonetheless, it’s still a physical (or concrete) system that provides the (as it were) input and an abstract causal organisation (captured computationally) that effectively becomes the output.

Chalmers develops his theme. He writes:

“It is easy to think of a computer as simply an input-output device, with nothing in between except for some formal mathematical manipulations.”

However:

“This way of looking at things [] leaves out the key fact that there are rich causal dynamics inside a computer, just as there are in the brain.”

Chalmers has just mentioned the human brain. Indeed he discusses the “mirroring” of the brain in non-biological physical systems. Yet many have argued that the mere mirroring of the human brain defeats the object of AI. However, since this raises its own issues and is more particular than the prior discussion about the relation between abstract algorithms and their concrete implementations, nothing more will be said about the brain here.

Conclusion

As stated in the introduction, it’s of course the case that most — or perhaps all — adherents of Strong AI would never deny that their abstract objects (i.e., algorithms and computations) need to be implemented in the (to use Chalmers’ words) “physical world”. That said, the manner of that implementation (as well as the nature of the physical material which does that job) seems to be seen as almost — or even literally — irrelevant to them. It’s certainly the case that brains and biology are often played down. (There are AI exceptions to this.)

Yet it must be said that not a single example of AI success has been achieved without implementation. Indeed that seems like a statement of the blindingly obvious! However, Roger Penrose, John Searle and David Chalmers are focussing on mind, consciousness and understanding and tying such things to biology and brains. So even though innumerable algorithms have been successfully implemented in innumerable physical/concrete ways (ways which we experience many times in our everyday lives — from our laptops to scanning devices), when it comes to mind, consciousness and understanding (or “genuine intelligence” in Penrose’s case), things may be very different. In other words, there may be fundamental reasons as to why taking a Platonic position (as, arguably, most AI theorists and workers do) on algorithms and computations will come up short when it comes to consciousness, mind and understanding.

Penrose particularly stresses the biology of consciousness in that he notes the importance of such things as microtubules and (biologically-based) quantum coherence. Chalmers stresses causal structure. (He doesn’t tie causal structure exclusively to human brains; though he does believe — like Patricia Churchland — that many AI theorists ignore it.) And Searle most certainly does stress brains, biology and causation.

And now it must be stated that Chalmers, Penrose and Searle don’t actually deny the possibility that understanding and consciousness may be successfully instantiated by artificial entities in the future. What they do is offer their words of warning to AI theorists and workers in the field.

So, to finish off, let me quote a passage from Penrose in which he provides some hope for AI theorists — though only if they take on board the various fundamental facts (as he sees them) about animal brains. Penrose writes:

“[I]t should be clear [] that I am by no means arguing that it would be necessarily impossible to build a genuinely intelligent device [].”

But then comes a large but (or qualification):

“[S]o long as such a device were not a ‘machine’, in the specific sense of being computationally controlled. Instead it would have to incorporate the same kind of physical action that is responsible for evoking our own awareness. Since we do not yet have any physical theory of that action, it is certainly premature to speculate on when or whether such a putative device might be constructed.”

As can quickly be seen, Penrose’s hope-for-AI may not in fact amount to much — at least not if one accepts Penrose’s own arguments and positions.

So whatever the case is, Penrose, Chalmers and Searle argue — in their own individual ways — that biology, brains and causation are indeed important when it comes to strong (i.e., not weak) artificial intelligence.