On the American and British pragmatists’ reaction against formal and mathematical logic in the late 19th and early 20th centuries.

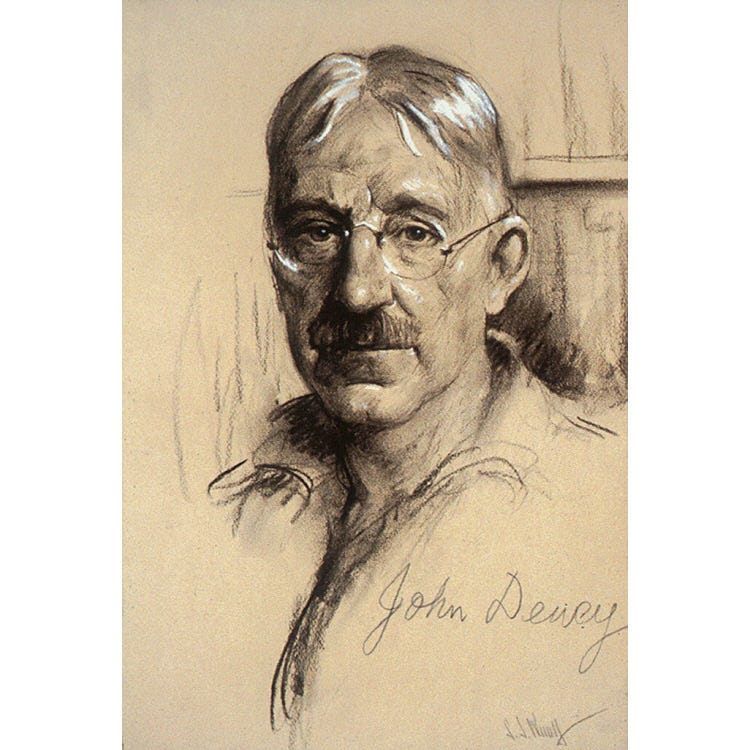

The German-British philosopher F.C.S. Schiller (1864–1937) argued that logic is, or at least should be, a “theory of inquiry”. (This position is captured in Schiller’s book, Logic for Use.) In America and, to a lesser degree, in the United Kingdom, such a view was in the air from roughly the 1870s to the 1920s… and onward (see here). Indeed the American philosopher and pragmatist John Dewey (there’s more on Dewey in the final section) wrote a book called Logic: The Theory of Inquiry. (Dewey was influenced by Schiller, just as Schiller was, in turn, influenced by C.S. Peirce.)

So if logic is a theory of inquiry, then it will primarily be an inquiry into human thought processes rather than into the formal relations which exist between logical symbols and statements. In addition, one prime pursuit of this kind of logic was to understand how people actually “fall into error”. Its concern, therefore, wasn’t such things as system-based validity, entailment, consistency, etc. And that was because (so these pragmatists believed) these things don’t depend on empirical or observational truth, even if the soundness of an argument does, since a sound argument is a valid one whose premises are true.

Schiller also argued that science simply isn’t as logical as many people assume. (Ironically, much was written under the description “the logic of science” after Schiller died.) Moreover, logical techniques and logical methodologies, in Schiller’s eyes, aren’t foolproof. Schiller also argued (perhaps obviously) that logic alone can’t solve all the problems that bedevil scientific experiment and research.

More specifically, Schiller argued that scientists simply can’t know a priori about all the possible “unforeseen objections” (Schiller’s phrase) to their theories or experiments which may appear in the future. In other words, there’s simply no way that these future possibilities can be known (or preempted) beforehand. And it’s not only these possible objections which are unforeseen, but also new physical conditions and experimental findings.

So scientists can’t logically (or fully) predict any future changes to science.

Now consider, for example, the impossibility of scientists predicting quantum phenomena in, say, the 18th century. And think also of all the other “revolutions” in 20th-century science which could never have been predicted or preempted.

The same goes for logic itself.

Consider the rise of many-valued logic. Consider the attacks on (or at least the questionings and revisions of) the Law of Excluded Middle. (The American philosopher W.V.O. Quine became a pragmatist about the Law of Excluded Middle, or at least about its applicability, in response to the findings of quantum mechanics and the results of various scientific experiments. See here.)

Hardly any of these radical scientific and logical changes could have been prophesied in the 18th century, or even in the first half of the 19th century.

A traditional empiricist might also have argued that many of these scientific (if not logical) revolutions couldn’t have been predicted because predictions, by their very nature, are at least partly dependent on past and present experiences or observations. And because such things weren’t observed in the 18th century, it follows that they couldn’t have been predicted either. This means that no matter how strong or speculative a prediction is, it would still depend, at least in part, on empirical data or observations. And if no such data were available to point in the direction of, say, quantum phenomena, then such phenomena couldn’t even have been speculated about.

Of course it might have been the case that certain speculators predicted such scientific revolutions by (as it were) accident. Indeed in the philosophy of science much is made of scientists, philosophers and even lunatics getting things right, yet for the wrong reasons. So, in broad terms, some people have made wild leaps in the dark and still got things right. However, even if such speculators got things right, few (if any) scientists of their day would have taken much notice of them. That’s primarily because such speculative theories (or ideas) would have been completely divested of any observational or experimental foundation or content.

Of course even the scientists who were strict sceptics would never have demanded complete and unequivocal justifications of the speculators’ predictions or hypotheses. Indeed scientists have always realised that speculations are vital in science. That said, such scientists would have expected the aforementioned speculations (or theories) to have at least (as it were) one foot on the ground. In other words, such speculations should never entirely belong to another world.

A Little More on John Dewey

In John Dewey’s eyes, logic should be largely derived from the methods and practices which are used in science and by everyday people, including those who aren’t experts. And because science is always on the move, Dewey also believed (as expressed in his Essays on Experimental Logic) that logicians shouldn’t see logical principles as

“eternal truths which have been laid down once and for all as supplying a pattern of reasoning to which all inquiry must conform”.

More generally, Dewey expressed his naturalist position on logic (or at least on “thinking”) in this way:

“[T]hinking, or knowledge-getting, is far from being the armchair thing it is often supposed to be. The reason it is not an armchair thing is that it is not an event going on exclusively within the cortex or the cortex and vocal organs. It involves the explorations by which relevant data are procured and the physical analyses by which they are refined and made precise.”

So Dewey believed (like C.S. Peirce before him) that logic should be fallibilist in nature — just like science. Indeed his fellow American philosopher W.V.O. Quine (as already mentioned) was even a fallibilist when it came to both mathematics and logic.

Dewey also (if indirectly) argued that the arrow should point from the world to logic, as well as from science to logic. In that sense, then, logic seemed to take a back seat to science.

Moreover, because science’s relation to the world is more (as it were) direct than that of either logic or philosophy, the latter two (to use Quine’s words) “should defer to science”. Alternatively, and to use a term from the 20th century, logic and philosophy should be naturalised so that they don’t systematically conflict with science and its findings.

So Dewey clearly took a pragmatist line on logic.

Dewey noted the many successes (however defined) of science and everyday life. He therefore believed that logicians should adopt whichever logical rules or principles scientists and everyday people used when they scored their particular successes. In any case, scientists themselves have always taken on board new logical methods in their pursuits. And Dewey believed that logicians should do so too.
