In a purely logical argument, even if the premises aren’t in any way (semantically) connected to the conclusion, the argument may still be both valid and sound.

Professor Edwin D. Mares displays what he sees as a problem with purely formal logic when he offers us the following example of a valid argument:

The sky is blue.

-------------------------------------------------------------------------------------------------------

∴ there is no integer n greater than or equal to 3 such that for any non-zero integers x, y, z, xⁿ = yⁿ + zⁿ.

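Edwin Mares says that the above “is valid, in fact sound, on the classical logician’s definition”. Strictly speaking, validity and soundness are both properties of the argument: it is valid, and it is also sound because its premise is true. (The premise and the conclusion themselves are true, not sound.) In more detail, the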

“premise cannot be true in any possible circumstance in which the conclusion is false”.

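Clearly the content of the premise isn’t semantically, or otherwise, connected to the content of the conclusion. However, the argument is still valid and sound. That’s because the conclusion is Fermat’s Last Theorem, which Andrew Wiles proved in the 1990s. As a mathematical truth, it can’t be false in any possible circumstance, so there’s no circumstance in which the premise is true and the conclusion false.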
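The definition of validity quoted above can be sketched as a check over possible circumstances. What follows is a minimal Python sketch under loudly stated modelling assumptions: each circumstance is a hypothetical dictionary of facts, the helper names `worlds`, `contingent_worlds` and `is_valid` are invented for illustration, and Fermat’s Last Theorem is modelled simply as a fact that holds in every circumstance. It shows the argument passing the test only because its conclusion never varies.

```python
from itertools import product

# Hypothetical model: a "circumstance" is a dict of facts. FLT (Fermat's Last
# Theorem) is a mathematical truth, so it is ASSUMED true in every circumstance.
def worlds():
    for sky_blue in (True, False):
        yield {"sky_blue": sky_blue, "flt": True}

# For contrast: circumstances in which the conclusion is allowed to vary freely.
def contingent_worlds():
    for sky_blue, flt in product((True, False), repeat=2):
        yield {"sky_blue": sky_blue, "flt": flt}

def is_valid(premises, conclusion, circumstances):
    """Valid iff no circumstance makes every premise true and the conclusion false."""
    return all(
        not all(p(w) for p in premises) or conclusion(w)
        for w in circumstances
    )

def premise(w):      # "The sky is blue."
    return w["sky_blue"]

def conclusion(w):   # "There is no integer n >= 3 such that ..."
    return w["flt"]

print(is_valid([premise], conclusion, list(worlds())))             # True
print(is_valid([premise], conclusion, list(contingent_worlds())))  # False
```

If the conclusion is allowed to vary freely, as a contingent sentence would, the same check finds a circumstance with a true premise and a false conclusion.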

That said, it’s not clear from Edwin Mares’ symbolic expression above whether he meant this: “If P, then Q. P. Therefore Q.” That is, perhaps the premise “The sky is blue”, with a line under it, followed by the mathematical statement, is used as symbolic shorthand for an example of modus ponens which doesn’t have a semantic connection between P and Q. In other words, Mares’ “P, therefore Q” isn’t (really) an argument at all. However, if both P and Q are true, then, logically, they can exist together without any semantic connection and without needing to be read as shorthand for an example of modus ponens.

Whatever the case, what’s the point of the “The sky is blue” example above?

Perhaps no logician would state such an argument in earnest. He would only do so, as Mares himself does, to make a point about logical validity. However, can’t we now ask why it counts as valid when all we seem to have is a true premise and a true conclusion, with no connection between them?

Perhaps showing the bare bones of the “The sky is blue” example will help. Thus:

P

∴ Q

Does that look any better? Even though we aren’t given any semantic content, both P and Q must have a truth-value. (In this case, both P and Q are true.) It is saying: P is true. Therefore Q is true. The above isn’t saying: Q is a consequence of P. (Or: P entails Q.) Basically, we’re being told that two true and unrelated statements can (as it were) exist together, as long as they don’t contradict each other. (Or, on the alternative reading mentioned earlier: “If P is true, then Q is true. P is true. Therefore Q is true.”)
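The bare form can also be tested with a classical truth table. In this sketch (the helper `counterexamples` is hypothetical), P and Q are treated as independent sentence letters with no content, and every row in which the premise is true and the conclusion false is collected.

```python
from itertools import product

def counterexamples(atoms, premises, conclusion):
    """Return every valuation making all premises true and the conclusion false."""
    found = []
    for values in product((True, False), repeat=len(atoms)):
        v = dict(zip(atoms, values))  # one row of the truth table
        if all(p(v) for p in premises) and not conclusion(v):
            found.append(v)
    return found

# The bare form "P, therefore Q", with P and Q as independent sentence letters.
print(counterexamples(["P", "Q"], [lambda v: v["P"]], lambda v: v["Q"]))
# [{'P': True, 'Q': False}]
```

The single row it finds shows that the form P ∴ Q is not valid by itself. On the classical definition, Mares’s particular instance counts as valid only because its Q cannot be false.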

So there are cases in which the premises of an argument are all true, and the conclusion is also true; and yet as Professor Stephen Read puts it:

“[T]here is an obvious sense in which the truth of the premises does not guarantee that of the conclusion.”

Ordinarily the truth of the premises is meant to “guarantee” the truth of the conclusion. So let’s look at Read’s own example:

i) All cats are animals

ii) Some animals have tails

iii) Therefore some cats have tails.

Clearly, premises i) and ii) are true. Indeed, iii) is also true. (Not all cats have tails, but some do. And, in any case, in logic “some” means “at least one”, which is compatible with “all”.)

So why is the argument above invalid?

It’s invalid not because of the assigned truth-values of the premises and the conclusion; but for another reason. The reason is that the sets used in the argument are (as it were) mixed up. Thus we have the distinct sets [animals], [cats] and [animals which have tails].

It doesn’t logically follow from “some animals have tails” that “some cats have tails”. Even if some animals have tails, it might have been the case that cats are among the animals which don’t. Thus iii) doesn’t necessarily follow from ii). Nor does it follow from i). ii) can be taken as an existential quantification over animals. iii), on the other hand, is an existential quantification over cats. Thus:

ii) (∃x)(Ax ∧ Tx)

iii) (∃x)(Cx ∧ Tx)

(where Ax: x is an animal; Cx: x is a cat; Tx: x has a tail.)

Clearly, Ax and Cx pick out different sets, so the two existential quantifications range over different things. It doesn’t follow, then, that what’s true of some animals is also true of some cats, even though cats are members of the set [animals]. Thus iii) doesn’t follow from ii).
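Read’s point can be made concrete by searching for a countermodel: an interpretation of C, A and T (read as cats, animals and things with tails) on which i) and ii) are true and iii) is false. The sketch below assumes a tiny two-element domain, formalises i) as (∀x)(Cx → Ax), and uses hypothetical helper names; it simply tries every assignment of subsets to the three predicates.

```python
from itertools import product

def subsets(domain):
    """All subsets of a finite domain, as frozensets."""
    return [frozenset(x for x, keep in zip(domain, mask) if keep)
            for mask in product((True, False), repeat=len(domain))]

def find_countermodel(domain):
    """Search interpretations of C (cats), A (animals), T (has a tail)."""
    for C, A, T in product(subsets(domain), repeat=3):
        all_cats_animals = C <= A          # i)   (∀x)(Cx → Ax)
        some_animals_tailed = bool(A & T)  # ii)  (∃x)(Ax ∧ Tx)
        some_cats_tailed = bool(C & T)     # iii) (∃x)(Cx ∧ Tx)
        if all_cats_animals and some_animals_tailed and not some_cats_tailed:
            return {"C": set(C), "A": set(A), "T": set(T)}
    return None  # no countermodel in this domain

print(find_countermodel([0, 1]))  # {'C': {0}, 'A': {0, 1}, 'T': {1}}
```

The model it returns has one cat (0) and one tailed animal (1) that isn’t a cat, so the premises hold while the conclusion fails. The actual truth-values of the premises and conclusion about real cats play no role in the search, which is why the form is invalid.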

To repeat: even though the premises and the conclusion are all true, the above still isn’t a valid argument. Read himself helps to show this by displaying an argument of the same form, this time with mutually exclusive sets, namely [cats] and [dogs]. Thus:

i) All cats are animals

ii) Some animals are dogs

iii) Therefore some cats are dogs.

This time, however, the conclusion is false, whereas i) and ii) are true. The set [dogs] is a subset of the set [animals]: some animals are indeed dogs. However, it doesn’t follow from this that “some cats are dogs”. That dogs are members of the set [animals] doesn’t mean that they’re also members of the subset [cats] simply because cats are also members of the set [animals]. Cats and dogs share animalhood, though they’re different subsets of the set [animals]. In other words, what’s true of dogs isn’t automatically true of cats.

The importance of sets, and their relation to subsets, may be expressed in terms of brackets. Thus:

[animals [[cats [[[cats with tails]]]]]]

not-[animals [[cats [[[dogs]]]]]]

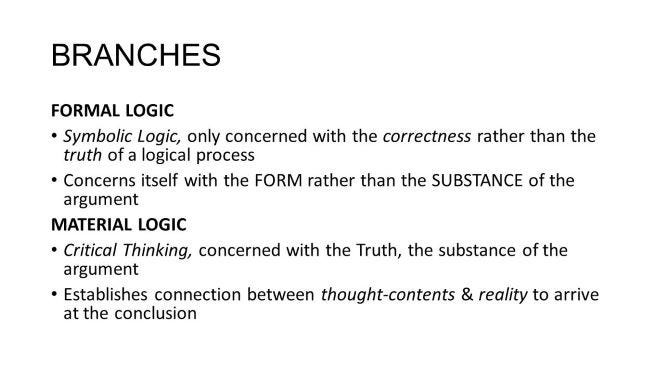
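The nesting expressed by the brackets can also be written with Python’s built-in set operations. The members below are hypothetical placeholders, not data about real animals; `<=` tests whether one set is a subset of another and `&` gives the intersection.

```python
# Hypothetical toy extensions; the member names are placeholders only.
animals = {"cat1", "cat2", "dog1", "dog2"}
cats = {"cat1", "cat2"}
cats_with_tails = {"cat1"}
dogs = {"dog1", "dog2"}

# Cats with tails sit inside cats, which sit inside animals.
print(cats_with_tails <= cats <= animals)  # True
# Dogs are also inside animals...
print(dogs <= animals)                     # True
# ...but dogs are not nested inside cats: the two sets share no members.
print(dogs <= cats, cats & dogs)           # False set()
```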

Material Validity and Formal Validity

Stephen Read makes a distinction between formal validity and material validity. He does so by using this example:

i) Iain is a bachelor

ii) So Iain is unmarried.

(One doesn’t usually find an argument with only a single premise.)

The above is materially valid because there’s enough semantic material in i) to make the conclusion acceptable. After all, if x is a bachelor, he must also be unmarried. Despite that, it’s formally invalid, because its form (“a is F; so a is G”) doesn’t by itself bring about the conclusion. That is, one can only move from i) to ii) if one already knows that all bachelors are unmarried: either we recognise the shared semantic content, or we know that “unmarried man” is a synonym of “bachelor”. Thus we have to add semantic content to i) in order to get ii), and it’s because of this that the argument is said to be formally invalid. Nonetheless, for the reasons just given, it is still materially valid.

The material validity of the above can also be shown by its inversion:

i) Iain is unmarried

ii) So Iain is a bachelor.

Read characterises such an argument by saying that its

“validity depends not on any form it exhibits, but on the content of certain expressions in it”.

Thus, in terms of logical form, it’s invalid; in terms of content (the expressions used), it’s valid. By contrast, the following is neither materially nor formally valid:

i) Iain is a bachelor.

ii) So Iain is a footballer.

There’s no semantic content in the word “bachelor” that can be directly tied to the content of the word “footballer”. Iain may well be a footballer, but his being a footballer doesn’t follow from his being a bachelor. The conclusion could be false even when the premise is true.

Another way of explaining the material (i.e., not formal) validity of the argument above is in terms of what logicians call a suppressed premise (or a hidden premise). This is more explicit than talk of synonyms or shared content. In this case, what the suppressed premise does is show the semantic connection between i) and ii). The actual suppressed premise for the above is the following:

All bachelors are unmarried.

Thus we should actually have the following argument:

i) Iain is a bachelor.

ii) All bachelors are unmarried.

iii) Therefore Iain is unmarried.
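The difference between the one-premise version and the full argument can be checked in the same countermodel style. In this sketch, B and U stand for the sets of bachelors and unmarried things, `iain` is whichever individual the name picks out, and the search runs over a hypothetical two-element domain; all helper names are invented for illustration.

```python
from itertools import product

def subsets(domain):
    """All subsets of a finite domain, as frozensets."""
    return [frozenset(x for x, keep in zip(domain, mask) if keep)
            for mask in product((True, False), repeat=len(domain))]

def countermodels(domain, with_suppressed_premise):
    """Interpretations where the premises hold but 'Iain is unmarried' fails."""
    found = []
    for B, U in product(subsets(domain), repeat=2):  # B: bachelors, U: unmarried
        for iain in domain:                          # who the name 'Iain' picks out
            premises = [iain in B]                   # Iain is a bachelor
            if with_suppressed_premise:
                premises.append(B <= U)              # All bachelors are unmarried
            if all(premises) and iain not in U:      # conclusion fails
                found.append((set(B), set(U), iain))
    return found

print(len(countermodels([0, 1], with_suppressed_premise=False)) > 0)  # True
print(countermodels([0, 1], with_suppressed_premise=True))            # []
```

A search over a small domain can only expose countermodels; an empty result is not by itself a proof of validity. Here, though, the general reasoning is immediate: if Iain is in B and B is a subset of U, then Iain is in U.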

It may now be seen more clearly that

i) Iain is unmarried.

ii) So Iain is a bachelor.

doesn’t work formally; though it does work materially.

What about this?

i) All bachelors are unmarried.

ii) So Iain is unmarried.

As it stands, this is clearly a bad argument. (An argument with an unstated premise is called an enthymeme.) Indeed, it can hardly be called an argument at all. Nonetheless, it too can be seen to have a suppressed (or hidden) premise. Thus:

i) All bachelors are unmarried.

ii) [Suppressed premise: Iain is a bachelor.]

iii) So Iain is unmarried.

Now let’s take the classic case of modus ponens:

A, if A then B / Therefore B

That means:

If A is the case, then B is the case. A is the case. Therefore B must also be the case.

The obvious question here is: What connects A to B (or B to A)? In terms of this debate, is the connection material or formal? Clearly, if the content of both A and B isn’t given, then it’s impossible to answer this question.
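Modus ponens can be checked by the same truth-table method, treating “if A then B” as the classical material conditional (an assumption of this sketch; the function names are hypothetical). Notice that the check never looks at what A and B say, which is the formal side of the question just raised.

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

def modus_ponens_counterexamples():
    """Rows where 'A' and 'if A then B' are true but 'B' is false."""
    rows = []
    for A, B in product((True, False), repeat=2):
        if A and implies(A, B) and not B:
            rows.append((A, B))
    return rows

print(modus_ponens_counterexamples())  # []
```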

As with the earlier examples, an argument of this kind can be treated as having a suppressed premise. Thus:

i) [Suppressed premise: Britain’s leading politician is the Prime Minister.]

ii) Boris Johnson is Britain’s leading politician.

iii) Therefore Boris Johnson is Britain’s Prime Minister.

In this instance, the premises and conclusion are (or were, at the time of writing) true. Yet i) is only contingently (i.e., not necessarily) connected to ii) and iii).

Finally, Stephen Read puts the formalist position on logic very clearly when he states the following:

“Logic is now seen — now redefined — as the study of formal consequence, those validities resulting not from the matter and content of the constituent expressions, but from the formal structure.”

We can now ask:

What is the point of a logic without material or semantic content?

If logic were purely formal, then wouldn’t all the sentence and predicate symbols (not the logical symbols) simply be autonyms? (That is, all the p’s, q’s, x’s, F’s, G’s etc. would be purely self-referential.) So what would be left of logic if that were the case? It might seem that we could no longer say that logic is about argumentation. But that doesn’t follow: we can still learn about argumentation from schemas (or argument-forms) which are purely formal in nature. And that basically means that the dots don’t always, or necessarily, need to be filled in.